Research

AI applications in healthcare and education

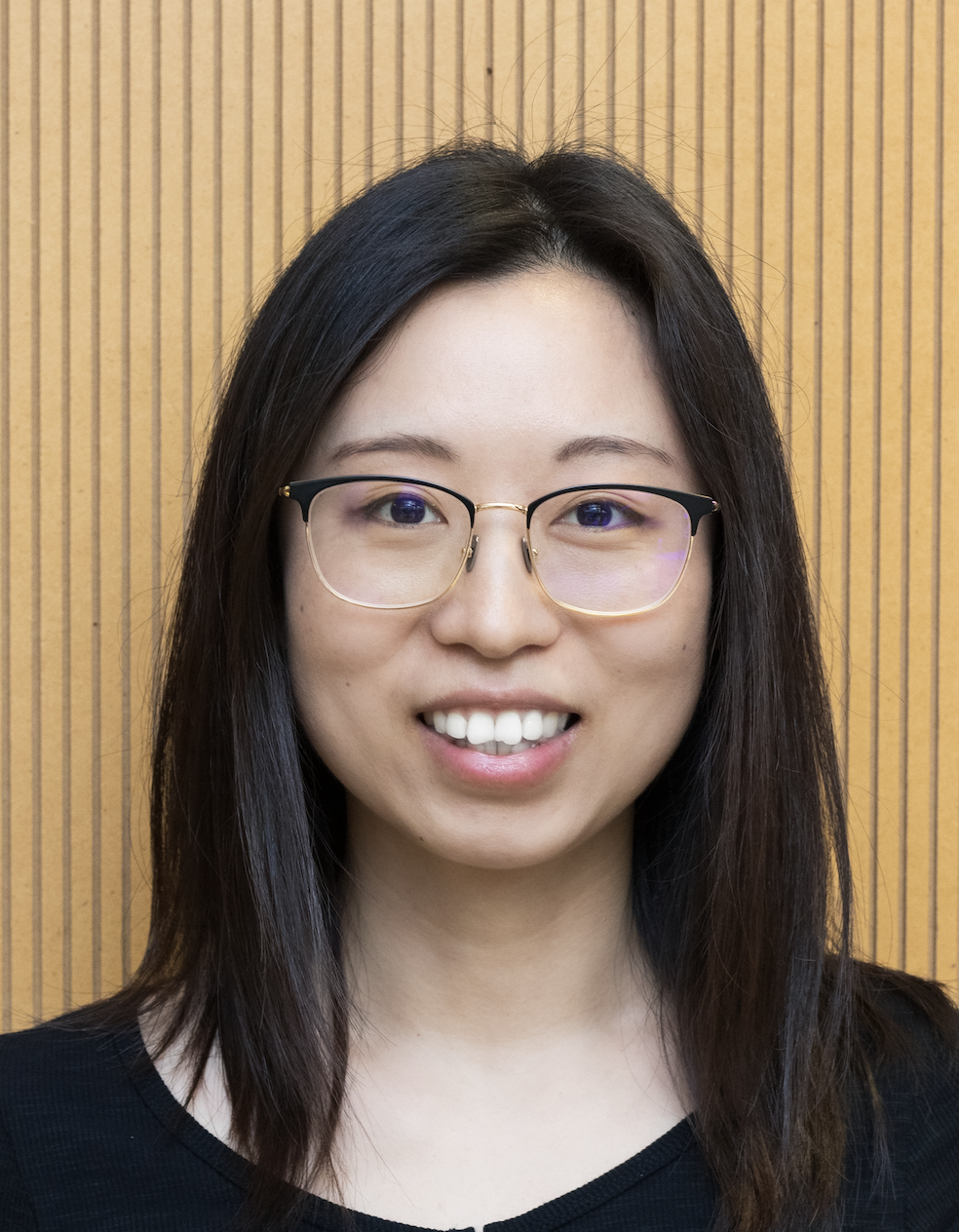

This research direction leverages artificial intelligence as a foundational model to both understand and augment human cognition. A key direction uses the striking alignment between large language models and the human brain to create computational analogues of neurological function and dysfunction. For instance, in one line of work, we lesion modular AI systems to simulate and study language disorders such as aphasia, and then explore rehabilitation by retraining the model's healthy components. In effect, the AI serves as a virtual testbed for developing novel therapeutic interventions. This approach exemplifies my broader mission: to move beyond pure simulation and use AI as a transformative tool for building next-generation solutions. In healthcare, this includes advanced brain-computer interfaces for communication. In education, it includes personalized cognitive tools and language learning systems.
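To make the lesion-and-retrain idea concrete, here is a toy sketch. It is not the method used in this work; it is an illustration under stated assumptions. The "modular system" is reduced to two hypothetical modules (`module_a`, `module_b`), each a single scalar weight, and their contributions are summed. A lesion silences one module. Rehabilitation then retrains only the remaining healthy module with plain gradient descent. The data, learning rate, and function names are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Weights = Dict[str, float]
Data = Sequence[Tuple[float, float]]


def predict(weights: Weights, x: float) -> float:
    # Toy "modular" model (hypothetical): output is the sum of two modules' contributions.
    return weights["module_a"] * x + weights["module_b"] * x


def mse(weights: Weights, data: Data) -> float:
    # Mean squared error of the model on (input, target) pairs.
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def lesion(weights: Weights, module: str) -> Weights:
    # Simulate damage by silencing one module (setting its weight to zero).
    damaged = dict(weights)
    damaged[module] = 0.0
    return damaged


def retrain(weights: Weights, data: Data, trainable: List[str],
            lr: float = 0.1, steps: int = 200) -> Weights:
    # Plain gradient descent on MSE that updates only the "healthy" modules
    # listed in `trainable`; the lesioned module stays frozen at zero.
    w = dict(weights)
    for _ in range(steps):
        for name in trainable:
            # Both modules scale x, so d(MSE)/dw = mean(2 * (prediction - y) * x).
            grad = sum(2 * (predict(w, x) - y) * x for x, y in data) / len(data)
            w[name] -= lr * grad
    return w


# Hypothetical target behavior y = 3x, produced by the intact model (2x + 1x).
data = [(i / 10, 3.0 * (i / 10)) for i in range(-10, 11)]
healthy = {"module_a": 2.0, "module_b": 1.0}
damaged = lesion(healthy, "module_a")
recovered = retrain(damaged, data, trainable=["module_b"])
print(round(mse(healthy, data), 6), round(mse(damaged, data), 3), round(mse(recovered, data), 6))
# prints 0.0 1.467 0.0
```

In this toy setting, the healthy module compensates fully for the lesioned one. In a real network, the degree of recovery after retraining is exactly the open question such experiments probe.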

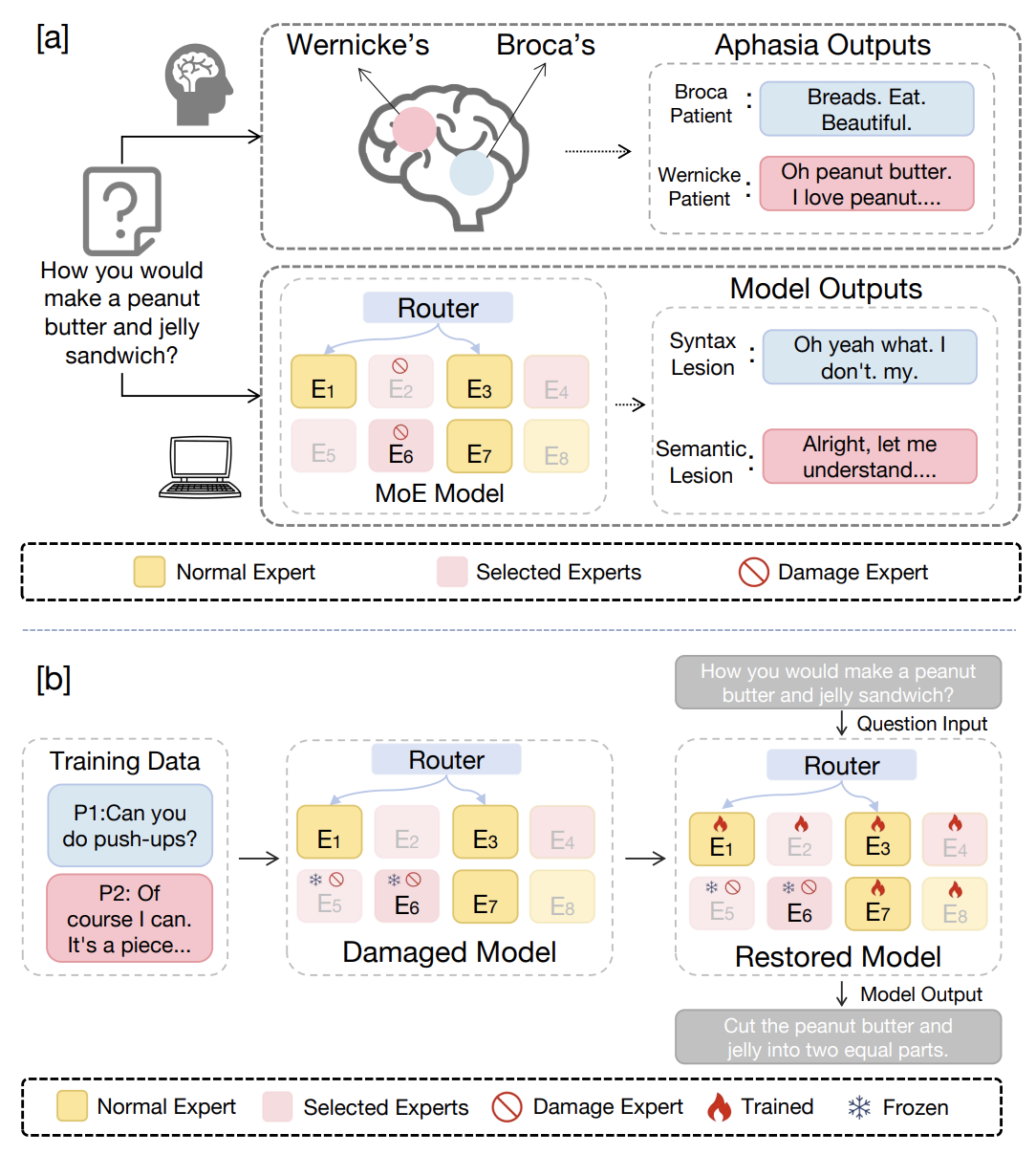

Toward coherent text generation from brain activations

The primary goal of fMRI decoding is to establish a mapping between brain activity and the stimuli that evoke it, a crucial step toward developing brain-computer interface systems. However, traditional fMRI decoding frameworks face significant challenges: they cannot directly predict words from a vocabulary, nor can they produce coherent text. To address these challenges, we propose employing pre-trained language models to help generate fluent and coherent text directly from brain activity. Following this approach, we introduce the cross-modal cloze task as well as the brain-to-text and fMRI2text tasks.

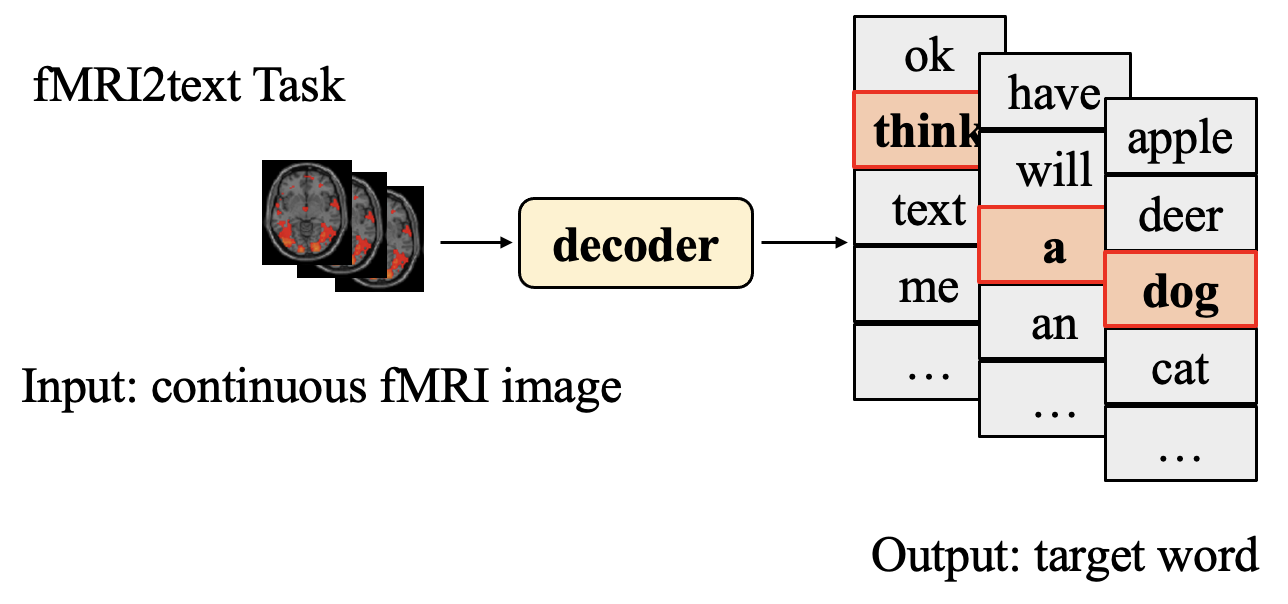

Phrasal composition mechanisms in humans

Understanding the computational operations involved in conceptual composition is fundamental for theories of language. However, the existing literature on this topic remains fragmented, comprising disconnected theories from various fields. For instance, formal semantic theories in linguistics rely on type-driven interpretation without explicitly representing the conceptual content of lexical items. Neurolinguistic research, by contrast, suggests that the brain is sensitive to conceptual factors during word composition. What is the relationship between these two types of theories? Do they describe two distinct aspects of composition that operate independently, or do they interact in some way when the brain interprets a phrase? To probe this question, we examined how the mathematical operations that account for the combination of two words into a phrase are affected by two factors: the semantic content of the words and the formal linguistic relation between them.

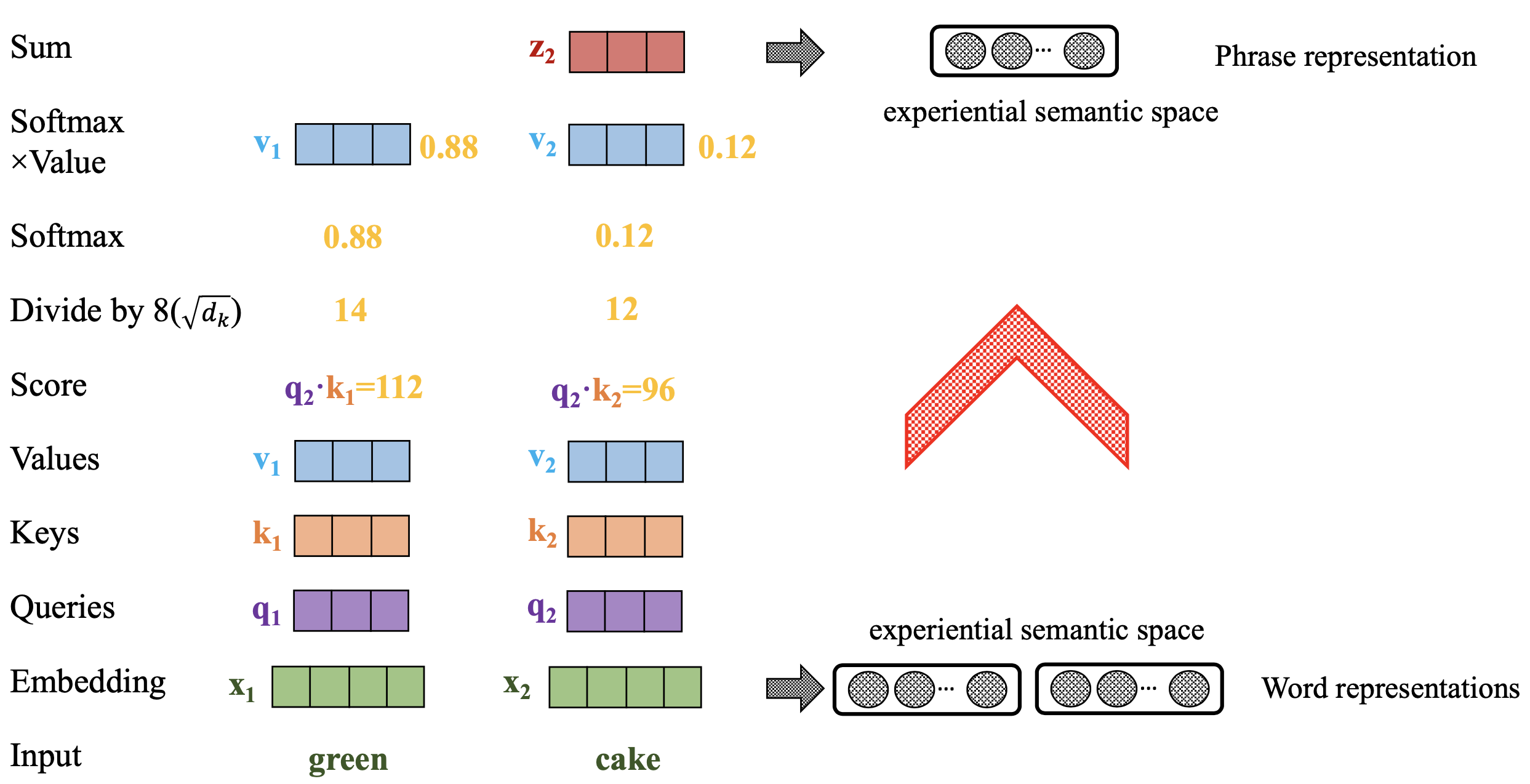

Phrasal composition in transformer models

The Transformer has proven to be an efficient architecture for large language models, enabling them to exhibit human-like behaviors. However, it remains unclear how these models combine the properties of individual words. To investigate this, we focus on the simplest compositional unit, the two-word phrase. We then employ four simple, parameter-free composition operations: addition, multiplication, first word, and second word. This approach serves as a first step toward understanding the composition mechanisms in transformer modules.
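The four composition operations can be written in a few lines. Below is a minimal sketch under two assumptions. First, multiplication is taken to be element-wise. Second, an operation's fit is scored by cosine similarity between its output and a representation of the whole phrase. That scoring scheme, the toy vectors, and the names `COMPOSITION_OPS` and `best_operation` are hypothetical illustrations, not the evaluation used in this work.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]

# The four parameter-free operations from the text. Multiplication is assumed
# here to be element-wise.
COMPOSITION_OPS: Dict[str, Callable[[Vector, Vector], Vector]] = {
    "addition": lambda u, v: [a + b for a, b in zip(u, v)],
    "multiplication": lambda u, v: [a * b for a, b in zip(u, v)],
    "first_word": lambda u, v: list(u),
    "second_word": lambda u, v: list(v),
}


def cosine(a: Vector, b: Vector) -> float:
    # Cosine similarity; returns 0.0 if either vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_operation(u: Vector, v: Vector, phrase: Vector) -> Tuple[str, Dict[str, float]]:
    # Score each operation by how closely its output matches the phrase representation.
    scores = {name: cosine(op(u, v), phrase) for name, op in COMPOSITION_OPS.items()}
    return max(scores, key=scores.get), scores


# Toy 3-d vectors standing in for two word representations and the phrase representation.
u, v = [1.0, 0.0, 2.0], [0.0, 1.0, 1.0]
phrase = [1.0, 1.0, 3.0]
best, scores = best_operation(u, v, phrase)
print(best)  # prints addition
```

Because none of the operations has learnable parameters, any difference in fit reflects how the representations themselves combine, not what a fitted composition function could learn.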

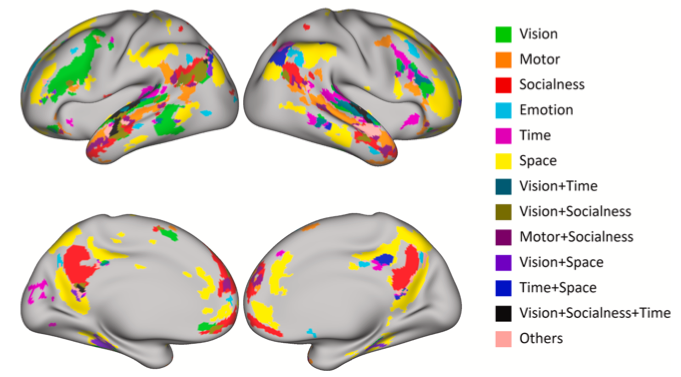

Semantic representation of words in our brain

Multiple sensory-motor and non-sensory-motor dimensions have been proposed for semantic representation, but it remains unclear how the semantic system is organized along them in the human brain. Using naturalistic fMRI data and large-scale semantic ratings, we investigated the overlaps and dissociations between the neural correlates of six semantic dimensions: vision, motor, socialness, emotion, space, and time. Our findings revealed a semantic atlas more complex than current neurobiological models of semantic representation predict.
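One simple way to quantify "overlaps and dissociations" is sketched below. It assumes each dimension's neural correlate has already been thresholded into a set of voxel indices. Overlap is then measured with the Dice coefficient, a common overlap measure. Dissociation is taken to mean voxels that respond to one dimension only. This is an illustrative assumption, not necessarily the analysis used in the study. The voxel sets and the names `dice` and `overlap_report` are hypothetical.

```python
from itertools import combinations
from typing import Dict, Set, Tuple


def dice(a: Set[int], b: Set[int]) -> float:
    # Dice coefficient: 1.0 for identical maps, 0.0 for disjoint ones.
    if not a and not b:
        return 0.0
    return 2 * len(a & b) / (len(a) + len(b))


def overlap_report(
    maps: Dict[str, Set[int]]
) -> Tuple[Dict[Tuple[str, str], float], Dict[str, Set[int]]]:
    # Pairwise overlap between every pair of dimensions (alphabetical order).
    pairwise = {(d1, d2): dice(maps[d1], maps[d2]) for d1, d2 in combinations(sorted(maps), 2)}
    # Voxels that respond to one dimension and to no other (a simple notion of dissociation).
    unique = {
        d: maps[d] - set().union(*(maps[o] for o in maps if o != d))
        for d in maps
    }
    return pairwise, unique


# Hypothetical thresholded maps (sets of voxel indices) for the six dimensions.
maps = {
    "vision": {1, 2, 3, 4},
    "motor": {3, 4, 5},
    "socialness": {10, 11},
    "emotion": {11, 12},
    "space": {2, 20},
    "time": {21},
}
pairwise, unique = overlap_report(maps)
print(round(pairwise[("motor", "vision")], 3), unique["vision"])  # prints 0.571 {1}
```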

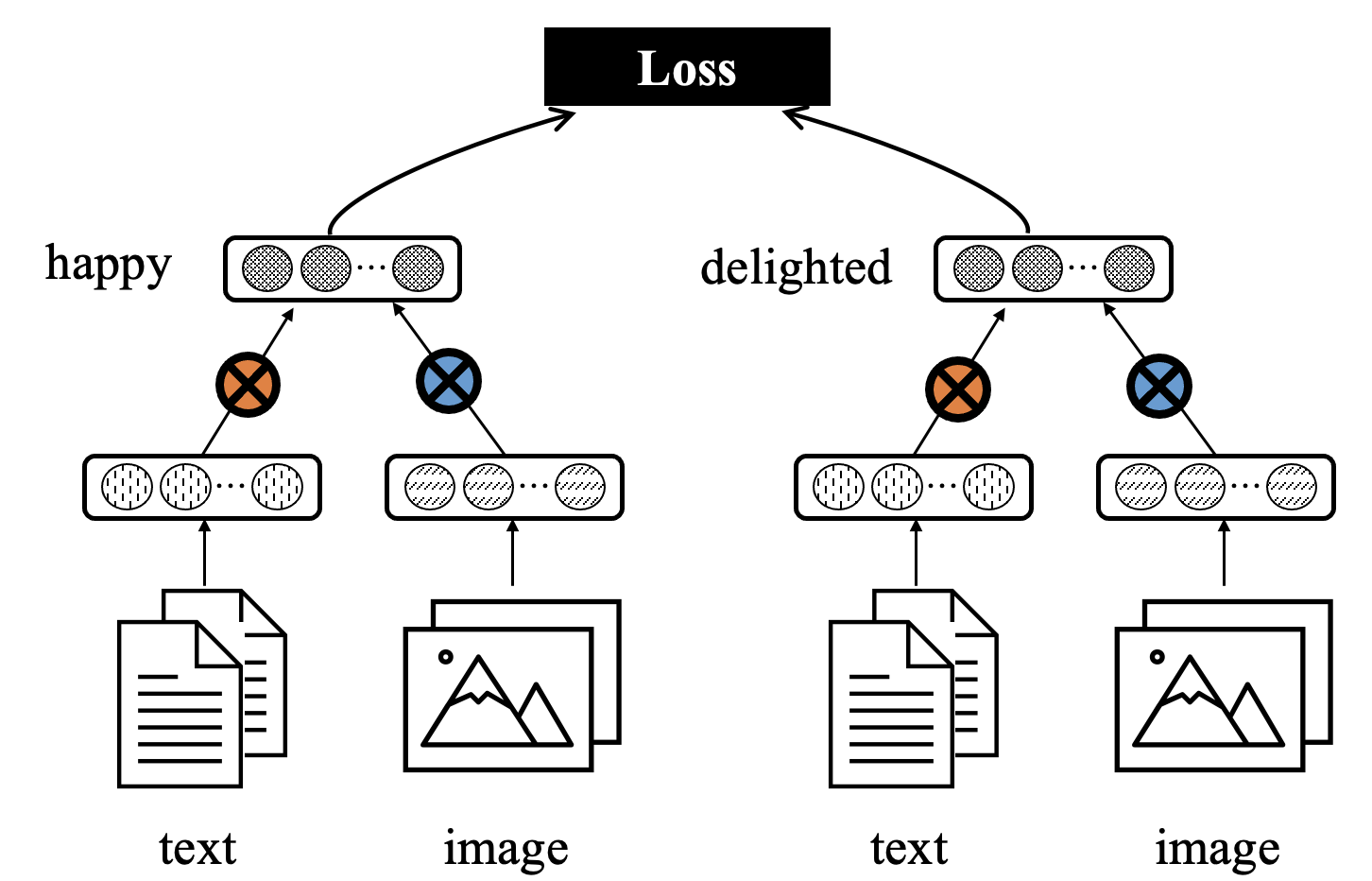

Representation learning of words, phrases, and sentences

Learning appropriate representations for words, phrases, and sentences is crucial for enabling machines to understand language. In my PhD thesis, I developed several algorithms to enhance multimodal word representations, compositional phrase representations, and sentence representations, guided by human attention.
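As an illustration of how a human attention signal could shape a sentence representation, here is a minimal sketch. It pools word vectors with weights proportional to a hypothetical per-word attention measure, such as reading times from eye-tracking. This is a generic attention-weighted average, not the specific algorithm developed in the thesis. The function name, the reading-time values, and the toy vectors are all hypothetical.

```python
from typing import List, Sequence


def attention_weighted_sentence(
    word_vectors: Sequence[Sequence[float]], fixations: Sequence[float]
) -> List[float]:
    # Weighted average of word vectors, with weights proportional to a human
    # attention signal (here: hypothetical per-word reading times in ms).
    if not word_vectors or len(word_vectors) != len(fixations):
        raise ValueError("need one attention value per word vector")
    total = sum(fixations)
    if total > 0:
        weights = [f / total for f in fixations]
    else:
        # No attention signal: fall back to a plain (unweighted) average.
        weights = [1.0 / len(word_vectors)] * len(word_vectors)
    dim = len(word_vectors[0])
    return [sum(w * vec[i] for w, vec in zip(weights, word_vectors)) for i in range(dim)]


vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
reading_times = [200.0, 100.0, 100.0]
print(attention_weighted_sentence(vectors, reading_times))  # prints [0.75, 0.5]
```

Words that readers dwell on longer contribute more to the sentence vector. The unweighted fallback keeps the function usable when no attention data is available.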